How is the development of AI helping people with disabilities?

The third episode of “AI for The Underdogs” will cover GIST’s gesture dataset.

*This dataset has been collected and annotated as part of Datumo’s <2021 AI Training Data Sponsorship Program> and is downloadable from Datumo’s Open Datasets website.


Who would benefit from this dataset?

Creating this dataset has been part of GIST’s research project to enable smoother communication between people with and without disabilities through gestures.

People with certain disabilities often face challenges in communicating with others. Those with severe disabilities may find it hard to use sign language, which makes communicating with their families or guardians very difficult. To make communication easier, GIST and Datumo together created a dataset of Korean symbolic gestures based on sign language to support communication for people with severe disabilities.

How did Datumo create this dataset?

All data were collected and labeled using Datumo’s crowd-sourcing platform, Cash Mission.

A total of 4,204 videos covering 205 gesture categories were created. Each gesture was performed five times per video, giving 21,020 gesture samples in total.

Training an AI model depends above all on a dataset that is both accurate and varied. Thanks to the 200,000 crowd-workers on Cash Mission and our strict guidelines, we were able to collect a large volume of accurate data.

- Main platform: DATUMO’s crowd-sourcing platform “Cash Mission”

- Data collection standards, methods, and guidance set based on the client’s needs

- Project designed, and guidelines and tutorials (using “Sondam” video tutorials) prepared for crowd-workers

- Pilot test carried out before the main project for quality assurance

- Collected “Sondam” video data via crowd-workers

- Metadata tagging carried out by crowd-workers

- Final data delivered after modification and inspection by in-house workers

Dataset Specification

- 4,204 videos across 205 gesture categories

- 21,020 gesture samples in total: each video, produced by a crowd-worker, contains five repetitions of one gesture
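The specification figures can be cross-checked with a little arithmetic: 21,020 counts individual gesture performances, which equals 4,204 videos × 5 repetitions, and 4,204 videos spread over 205 categories gives roughly 20.5 videos per category on average. A minimal Python check, taking the constants straight from the list above and assuming (our assumption) that each video belongs to exactly one category:

```python
# Figures taken from the dataset specification.
NUM_VIDEOS = 4_204       # videos recorded by crowd-workers
NUM_CATEGORIES = 205     # distinct Sondam gesture categories
REPS_PER_VIDEO = 5       # each gesture is performed five times per video


def total_gesture_instances(videos: int, reps: int) -> int:
    """Number of individual gesture performances across all videos."""
    return videos * reps


def mean_videos_per_category(videos: int, categories: int) -> float:
    """Average videos per category (assumes each video has exactly one category)."""
    return videos / categories


if __name__ == "__main__":
    print(total_gesture_instances(NUM_VIDEOS, REPS_PER_VIDEO))             # 21020
    print(round(mean_videos_per_category(NUM_VIDEOS, NUM_CATEGORIES), 1))  # 20.5
```

The average says nothing about how evenly videos are distributed; individual categories may hold more or fewer than 20 videos.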

The gesture dataset was created based on Datumo’s four core values:

[ ACCURACY / CONSISTENCY / BALANCE / COVERAGE ]

The dataset consists of videos of people performing the gestures, intended to train the relevant AI model to recognize them with as few errors as possible.

The dataset of “Sondam” gestures (Sondam is the name of the gesture set) could be used to train gesture recognition AI models or to build educational games that teach the gestures to those who need them.
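Training a gesture recognition model on this data requires separate training and evaluation sets. The sketch below assumes, hypothetically, that the dataset has been loaded as a list of `(video_id, category)` pairs; the actual file layout on Open Datasets may differ, and `stratified_split` is an illustrative helper of our own, not part of any Datumo tool. It holds out a share of videos from every category so that each gesture is represented at evaluation time.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=0):
    """Split (video_id, category) pairs so each category appears in both splits.

    Hypothetical helper: assumes one category label per video. Whole videos are
    assigned to a split, so the five repetitions inside a video never straddle
    training and evaluation data.
    """
    by_category = defaultdict(list)
    for video_id, category in samples:
        by_category[category].append(video_id)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, test = [], []
    for category in sorted(by_category):
        videos = by_category[category][:]  # copy before shuffling
        rng.shuffle(videos)
        # Hold out at least one video per category, unless it has only one video.
        n_test = max(1, round(len(videos) * test_fraction)) if len(videos) > 1 else 0
        test.extend((v, category) for v in videos[:n_test])
        train.extend((v, category) for v in videos[n_test:])
    return train, test


if __name__ == "__main__":
    # Hypothetical IDs and labels, not the dataset's real file names.
    samples = [(f"vid_{i:04d}", f"gesture_{i % 3}") for i in range(30)]
    train, test = stratified_split(samples, test_fraction=0.2)
    print(len(train), len(test))  # 24 6
```

Splitting by whole video keeps all five repetitions of a performance in the same split, so the model is not evaluated on a near-copy of a clip it trained on. If the metadata identifies which crowd-worker recorded each video, grouping the split by worker would be a stricter alternative.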

It is also a meaningful milestone, as it is the first benchmark dataset of its kind in Korea.

The video below shows an AI model developed using the Sondam gestures. The model recognizes the user’s movements and teaches specific gestures.

Technological progress does not always benefit everyone equally. However, Datumo and Wesee hope that the dataset and technology we develop can help anyone who needs them.

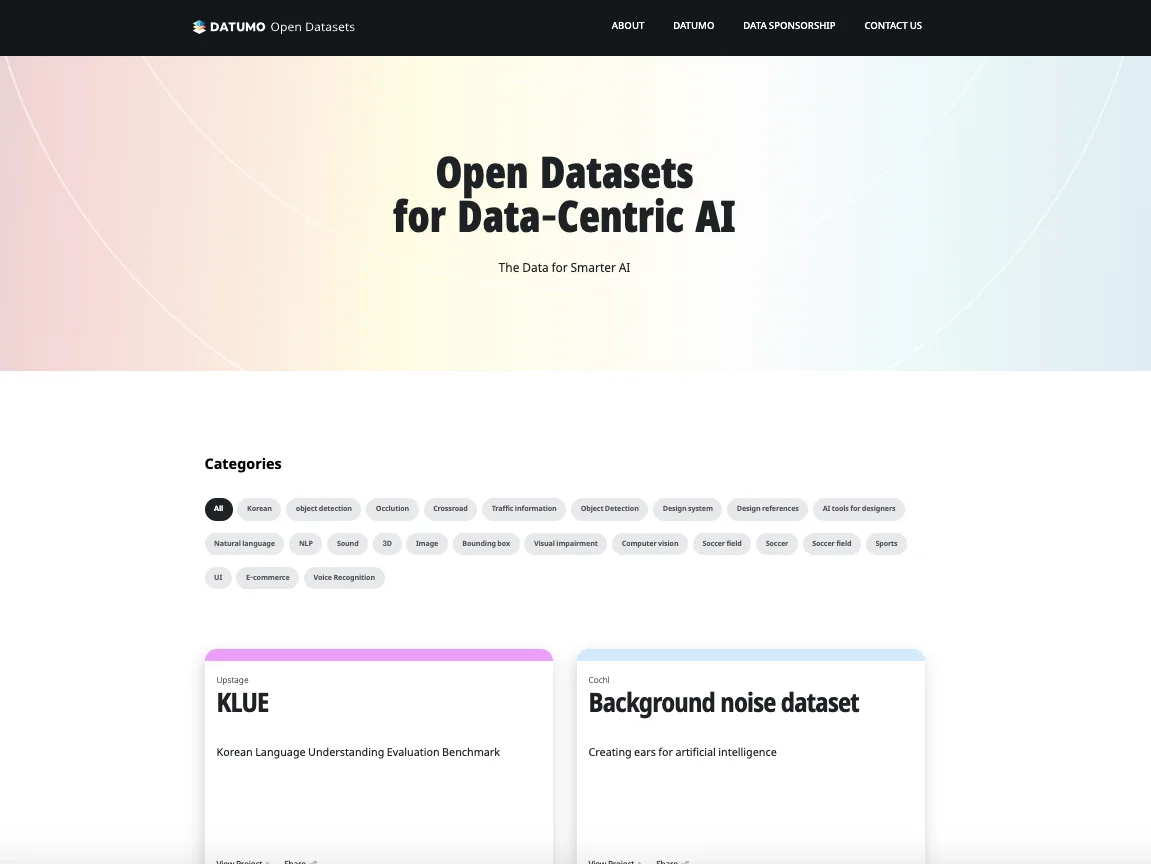

You can download this dataset for free from Datumo’s “Open Datasets”.


CC BY-SA

Reusers are allowed to distribute, remix, adapt, and build upon the material in any medium or format, even commercially, so long as attribution is given to the creator. If you remix, adapt, or build upon the material, you must license the modified material under identical terms.

https://creativecommons.org/licenses/by-sa/3.0/deed.en