Depth Estimation SOTA Model Using Stable Diffusion: Marigold

NLP is advancing quickly, but what about other fields? The release of text-to-image models in 2022 marked the mainstream adoption of generative AI. Models like Midjourney and DALL-E generated excitement with their capabilities and have since paved the way for models that generate not only images but also videos and 3D content.

Today, we introduce Marigold, a model that has achieved state-of-the-art (SOTA) results in depth estimation using Stable Diffusion.

Depth Estimation Models

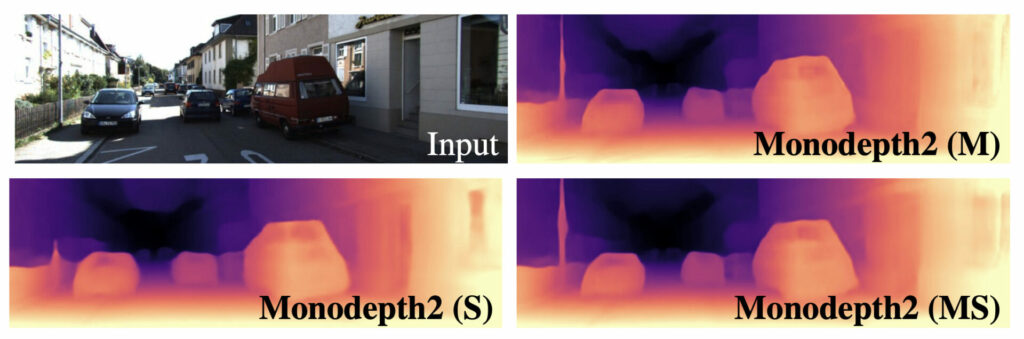

Source: Digging Into Self-Supervised Monocular Depth Estimation (Godard et al., 2018)

Let’s take a closer look at depth estimation technology. How do humans perceive the distance of objects? We see the world with two eyes, each capturing slightly different visual information. Our brains interpret this difference as depth. Similarly, in computer vision, two or more cameras can capture the same scene from different angles. The offset between matching points in the two images, called disparity, shrinks as objects get farther away, so depth can be estimated by comparing the images. This is known as binocular (or stereo) depth estimation.
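For a calibrated, rectified stereo pair, the relationship between disparity and depth is the standard formula Z = f · B / d, where f is the focal length in pixels, B is the distance between the cameras, and d is the disparity in pixels. The snippet below is a minimal sketch of that formula; the function name and the camera values are made up for illustration.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Convert stereo disparity (pixels) to depth (meters) via Z = f * B / d."""
    disparity_px = np.asarray(disparity_px, dtype=np.float64)
    # Zero disparity means the point is effectively at infinity.
    depth = np.full(disparity_px.shape, np.inf)
    valid = disparity_px > 0
    depth[valid] = focal_length_px * baseline_m / disparity_px[valid]
    return depth

# Hypothetical rig: 700 px focal length, cameras 0.5 m apart.
disparities = np.array([70.0, 35.0, 7.0, 0.0])
depths = depth_from_disparity(disparities, focal_length_px=700.0, baseline_m=0.5)
print(depths)  # larger disparity -> closer object
```

Halving the disparity doubles the estimated depth, which is why distant objects are harder to measure precisely with a stereo rig.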

However, humans can also estimate distance with just one eye. We rely on the visual size of objects and accumulated experiential knowledge to estimate distance. Computers can do the same. When estimating distance from a single 2D image, this falls under the category of monocular depth estimation.

The Principle and Structure of Marigold Using Stable Diffusion

Source: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation (Ke et al., 2023)

Marigold focuses on monocular depth estimation, meaning it generates a depth map from a single 2D image. To do this, a model has to draw on a large amount of prior knowledge: experience of what the objects in the image look like, where one object ends and another begins, how objects separate from the background, and how apparent size relates to relative depth.

The Marigold model was introduced in the paper Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation, published in December 2023. Unlike traditional methods, Marigold brings diffusion models, typically used for image generation, into the field of depth estimation. The researchers’ idea was as follows:

If image generation models have already learned high-quality images from various domains uploaded to the internet, could this be applied to depth estimation?

Thus, Marigold leverages the pre-trained capabilities of Stable Diffusion. To adapt this generative model for depth estimation, fine-tuning is required.

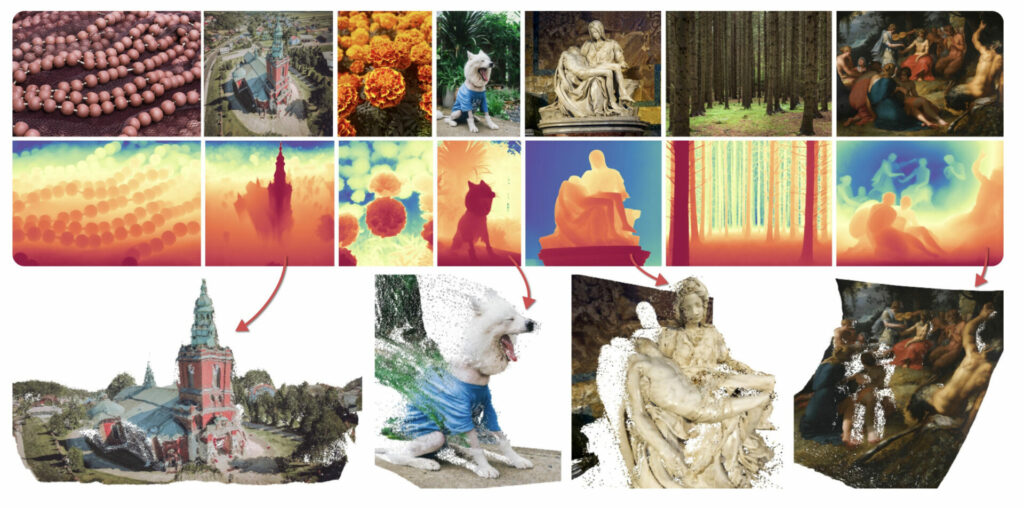

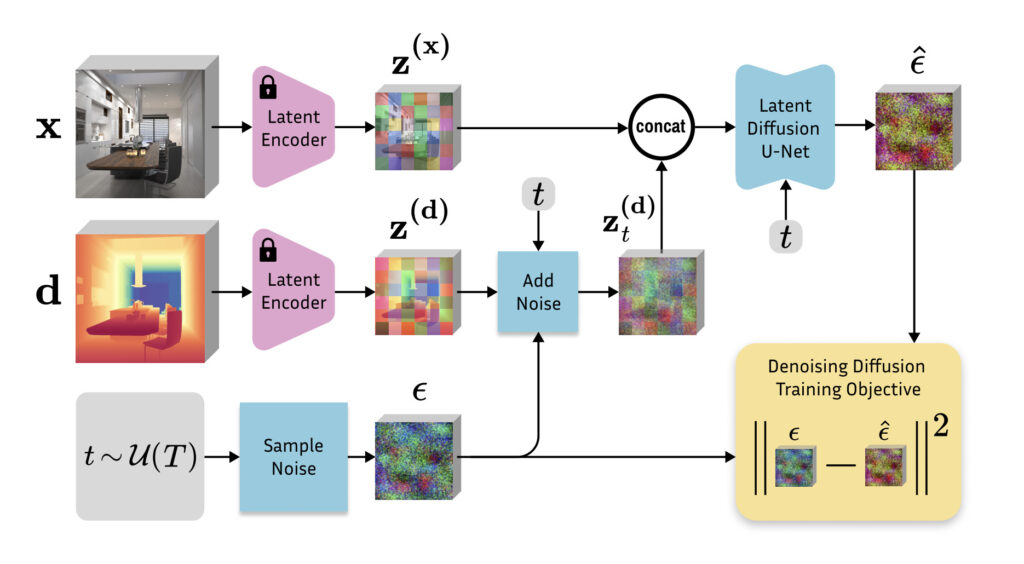

Source: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation (Ke et al., 2023)

The fine-tuning structure is shown in the image above. A VAE encoder maps both the real image and its depth map into latent space. Noise is added only to the depth latent, which is then concatenated with the clean image latent. The diffusion model is trained to remove that noise so that the depth map can be recovered. This is the same training principle as the latent diffusion model that underpins Stable Diffusion, but tailored to generate depth maps instead of images.
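The fine-tuning step described above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: random arrays stand in for the VAE encodings, the linear noise schedule is a placeholder, and `toy_denoiser` is a hypothetical stub for the U-Net that would actually be trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins for VAE outputs. Stable Diffusion's VAE produces a 4-channel
# latent at 1/8 of the image resolution; random arrays replace real encodings.
image_latent = rng.standard_normal((4, 48, 64))
depth_latent = rng.standard_normal((4, 48, 64))

# Placeholder linear beta schedule (an assumption of this sketch).
num_steps = 1000
betas = np.linspace(1e-4, 0.02, num_steps)
alpha_bars = np.cumprod(1.0 - betas)

def add_noise(clean_latent, noise, t):
    """Forward diffusion: z_t = sqrt(a_bar_t) * z_0 + sqrt(1 - a_bar_t) * eps."""
    return np.sqrt(alpha_bars[t]) * clean_latent + np.sqrt(1.0 - alpha_bars[t]) * noise

# Pick a random timestep and noise only the depth latent.
t = int(rng.integers(0, num_steps))
noise = rng.standard_normal(depth_latent.shape)
noisy_depth_latent = add_noise(depth_latent, noise, t)

# The clean image latent is the condition: concatenate along the channel axis.
unet_input = np.concatenate([image_latent, noisy_depth_latent], axis=0)

def toy_denoiser(x):
    """Hypothetical stub for the U-Net: returns a (useless) all-zero noise estimate."""
    return np.zeros_like(x[4:])

# Standard noise-prediction objective: mean squared error against the true noise.
predicted_noise = toy_denoiser(unet_input)
loss = np.mean((predicted_noise - noise) ** 2)
print(unet_input.shape, loss)
```

The concatenated input has eight channels (four for the image latent, four for the noisy depth latent), so the first layer of the denoising network has to accept twice as many input channels as in plain Stable Diffusion.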

To enhance training performance, synthetic data is used instead of datasets with real depth measurements. Real depth data is captured with physical sensors, whose limitations introduce noise and missing values, whereas rendered scenes come with complete depth for every pixel.

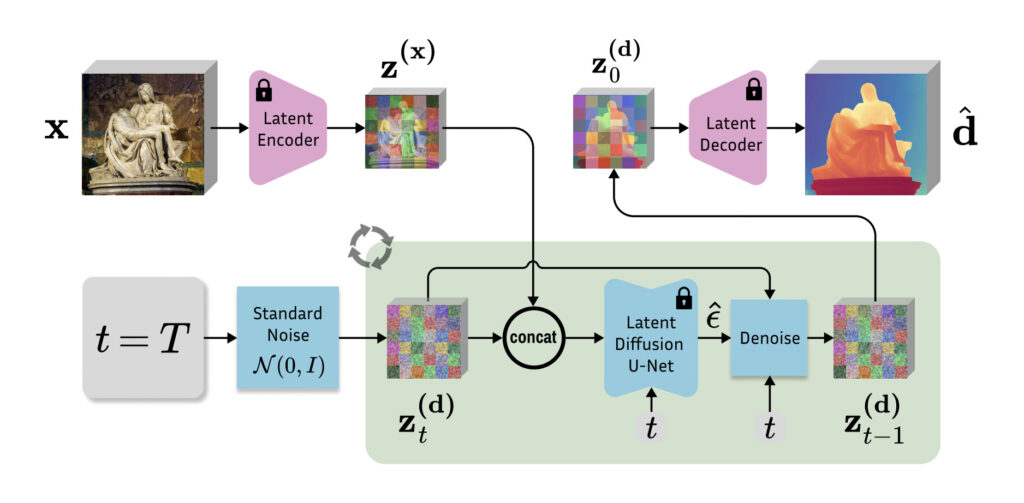

Source: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation (Ke et al., 2023)

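Once fine-tuning is complete, inference follows a similar process. The input image is encoded into latent space, and the depth latent starts as pure noise, since no ground-truth depth map is available. The diffusion model removes that noise step by step, conditioned on the image latent, and the VAE decoder turns the final latent into a high-resolution depth map.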
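The inference loop can be illustrated with a toy example. The sketch below makes several assumptions: it uses a deterministic DDIM-style update for brevity, a placeholder linear noise schedule, and a hypothetical `oracle_denoiser` that cheats by knowing the true depth latent. The oracle exists only so the loop can be run end to end without a trained network; VAE encoding and decoding are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

num_train_steps = 1000
betas = np.linspace(1e-4, 0.02, num_train_steps)  # placeholder schedule
alpha_bars = np.cumprod(1.0 - betas)

image_latent = rng.standard_normal((4, 48, 64))       # stand-in for the encoded image
true_depth_latent = rng.standard_normal((4, 48, 64))  # known only to the toy oracle

def oracle_denoiser(unet_input, t):
    """Hypothetical stand-in for the fine-tuned U-Net: returns the exact noise."""
    noisy_depth = unet_input[4:]
    return (noisy_depth - np.sqrt(alpha_bars[t]) * true_depth_latent) / np.sqrt(1.0 - alpha_bars[t])

def sample_depth_latent(image_latent, denoiser, num_inference_steps=10):
    """Start from pure noise and denoise it, conditioned on the image latent."""
    timesteps = np.linspace(num_train_steps - 1, 0, num_inference_steps).astype(int)
    z = rng.standard_normal(image_latent.shape)
    for i, t in enumerate(timesteps):
        eps = denoiser(np.concatenate([image_latent, z], axis=0), t)
        # Estimate the clean latent implied by the predicted noise.
        z0_pred = (z - np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alpha_bars[t])
        # Move to the next (less noisy) timestep; the last step returns z0_pred.
        ab_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        z = np.sqrt(ab_prev) * z0_pred + np.sqrt(1.0 - ab_prev) * eps
    return z

depth_latent = sample_depth_latent(image_latent, oracle_denoiser)
print(np.abs(depth_latent - true_depth_latent).max())
```

In a real pipeline, `image_latent` would come from the VAE encoder, the denoiser would be the fine-tuned network, and `depth_latent` would be passed through the VAE decoder to obtain the depth map.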

Marigold has achieved SOTA results in the field of depth estimation and excels in zero-shot performance. This means it produces impressive results even on previously unseen data. The generated depth maps accurately delineate object boundaries within images and align well with human intuition.

As hinted by the term “repurposing” in the paper’s title, this work demonstrates how the concept behind diffusion models can be applied to different tasks. This suggests that advancements in one model can be leveraged for various purposes in other fields. Diffusion models, known for their strong performance in image generation, are also being explored for text generation. Even if such early attempts don’t immediately yield strong results, research on one model can shape progress in other domains over the long term.